Today we have a cautionary tale for you about the Statistical Research Group, a group of scientists based at Columbia University during World War II. The group did research for the military, including work on the fuse for the atomic bomb, optimal amounts of explosives in shells, and aircraft survivability. Its members included top researchers such as Milton Friedman, who later founded the “Chicago” or “Monetarist” school of economic thought and won a Nobel Prize for his efforts (in retrospect, Monetarism was a failed attempt at moderating the Keynesian economic policies which are currently driving our economy into the ground).

The group’s greatest contribution to science was probably the invention of the field of sequential analysis, a statistical approach that evaluates sample data as it comes in and stops the analysis as soon as a statistically significant conclusion has been reached. It was developed in response to a comment by a Navy captain, who noted that when artillery rounds were fired in succession to determine which of two versions worked better (in what amounted to very expensive and *loud* A/B tests), he knew “long before the test is done” which was the superior one.[1]
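For the curious, the best-known sequential method is Wald’s own sequential probability ratio test (SPRT). Strictly speaking, it doesn’t wait for “significance” in the everyday sense: it keeps a running log-likelihood ratio and stops as soon as that ratio crosses one of two boundaries set by the error rates you are willing to accept. Below is a minimal Python sketch for yes/no trials (a hit or a conversion, say); the hypotheses `p0` and `p1`, the error rates, and the function name are illustrative assumptions, not anything taken from the group’s wartime work.

```python
import math


def sprt_bernoulli(observations, p0, p1, alpha=0.05, beta=0.05):
    """Wald's sequential probability ratio test for yes/no trials.

    H0: success probability is p0.  H1: success probability is p1 (p1 > p0).
    alpha = tolerated false-positive rate, beta = tolerated false-negative rate.
    Returns (decision, number_of_observations_used).
    """
    # Wald's approximate decision boundaries on the log-likelihood ratio.
    upper = math.log((1 - beta) / alpha)  # at or above this: accept H1
    lower = math.log(beta / (1 - alpha))  # at or below this: accept H0
    llr = 0.0
    for n, success in enumerate(observations, start=1):
        # Each observation adds its log-likelihood ratio under H1 vs. H0.
        if success:
            llr += math.log(p1 / p0)
        else:
            llr += math.log((1 - p1) / (1 - p0))
        if llr >= upper:
            return "accept H1", n
        if llr <= lower:
            return "accept H0", n
    # Ran out of data before either boundary was crossed.
    return "continue", len(observations)


if __name__ == "__main__":
    print(sprt_bernoulli([1] * 10, p0=0.5, p1=0.8))  # ('accept H1', 7)
    print(sprt_bernoulli([0] * 10, p0=0.5, p1=0.8))  # ('accept H0', 4)
```

With `p0 = 0.5` and `p1 = 0.8`, an unbroken run of successes settles on H1 after 7 observations, and an unbroken run of failures settles on H0 after just 4. That is the Navy captain’s “long before the test is done” in action.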

Abraham Wald and The Unseen Holes

However, the main part of our story involves one of the group’s members, Abraham Wald, who was given a critical problem by the military. Many bombers[2] were being shot down over Germany, and it was important to figure out where on the planes additional armor should be placed to increase their chances of survival.

As the story is told, Wald instructed the military to place a brand-new bomber next to the airfields where the bombers returned from their missions, and to have someone inspect the returning planes and paint a dot on the new plane at every spot where a hole was found on a returning plane. Over time the new plane accumulated a pattern of painted “holes” representing the damage on the fleet of returning bombers.

When enough dots accumulated, legend has it that the military assumed they should simply place the armor where the pattern of dots was the most dense.

Wald’s advice was the opposite – to put the armor where there were *the fewest* dots.

His reasoning: the holes which were *seen* were only the non-fatal holes. The holes which were *not seen* represented planes which did not return – these fatal holes were in fact located in wreckage on the ground in Germany, so they were impossible to see. So the places where the dots did *not* accumulate were the optimum places for additional armor.
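Wald’s actual paper is far more sophisticated, but the core effect is easy to reproduce with a toy simulation. In the Python sketch below, hits land uniformly at random across five sections of a plane, and each hit downs the plane with a made-up, section-specific probability; only planes that make it home get “painted”. The section names and lethality numbers are assumptions chosen purely for illustration.

```python
import random
from collections import Counter

# Hypothetical sections and the (made-up) chance that one hit there downs the plane.
LETHALITY = {"engine": 0.6, "cockpit": 0.4, "fuselage": 0.05, "wings": 0.05, "tail": 0.05}


def simulate(n_planes=10_000, hits_per_plane=3, seed=42):
    """Return (all hits by section, hits seen on returning planes by section)."""
    rng = random.Random(seed)
    sections = list(LETHALITY)
    all_hits = Counter()  # every hit, including those on planes that went down
    seen = Counter()      # hits visible on planes that made it home
    for _ in range(n_planes):
        # Hits land uniformly at random, so every section is hit about equally often.
        hits = [rng.choice(sections) for _ in range(hits_per_plane)]
        all_hits.update(hits)
        # The plane survives only if none of its hits turned out to be fatal.
        survived = all(rng.random() >= LETHALITY[s] for s in hits)
        if survived:
            seen.update(hits)  # only survivors get inspected and "painted"
    return all_hits, seen


if __name__ == "__main__":
    all_hits, seen = simulate()
    for section in LETHALITY:
        print(f"{section:9} hit {all_hits[section]:5}   seen on survivors {seen[section]:5}")
```

Run it and the engine, the deadliest section in this toy setup, shows the fewest holes on the returning planes, even though it was hit just as often as every other section.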

Wald’s paper on this (originally published secretly, with limited distribution) was called “A Method of Estimating Plane Vulnerability Based on Damage of Survivors”, and his techniques were used in subsequent wars (and presumably continue to be used).[3] In a way, it can be considered a seminal paper on search marketing.

A Very Similar Question About the Iraq War

When looking at the casualty statistics for the Iraq war, one item jumps out in particular: the proportion of casualties who were wounded rather than killed is *far* higher than it was in the Vietnam War. Why would this be? Surely our soldiers have better protection and medical care than they did decades ago?

The reason is very similar to our WWII bomber example: it is precisely *because* these soldiers had better protective armor and medical care in the Iraq war. Instead of dying under circumstances like those their predecessors faced, they tended to *make it back alive* to hospitals in an injured state. What we observed were the *seen* injuries; what we did not observe were the *unseen* deaths that never happened.

How These Examples Apply To Online Marketing

Why do I consider Wald’s paper to be a seminal paper on search marketing, even though it was published in 1943? Because in search, often what is important is *what you are not seeing*. I will give two key examples – traffic sources, and links.

Traffic Sources: Who is *Not* Coming to Your Site?

When performing keyword research, most people look at web server logs, Google Webmaster Tools reports, and Google Analytics, but none of these show the search terms for which the website is *not* receiving traffic, but should be.

A great way to augment this data in your keyword research is to use the AdWords Keyword Tool (or another third-party keyword research tool), and to enter partial phrases into Google Suggest and see what completions come back.
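Mechanically, this comparison is just a set difference: the terms you *could* be getting traffic on, minus the terms you already are. The Python sketch below assumes you have already exported both lists (from your analytics package and from whatever keyword tool you use) into plain lists of strings; it does not call any Google service, and the function names and the “acme” brand are hypothetical. The last helper simply generates the cut-off snippets (“acme a”, “acme b”, …) to type into Google Suggest by hand.

```python
def normalize(term):
    """Lower-case and collapse whitespace so 'Acme  Reviews' matches 'acme reviews'."""
    return " ".join(term.lower().split())


def keyword_gaps(candidate_terms, terms_with_traffic):
    """Candidate search terms that currently bring the site no visits, in input order."""
    have = {normalize(t) for t in terms_with_traffic}
    gaps, already_listed = [], set()
    for term in candidate_terms:
        key = normalize(term)
        # Keep terms with no traffic, and list each one only once.
        if key not in have and key not in already_listed:
            already_listed.add(key)
            gaps.append(key)
    return gaps


def suggest_seeds(brand, letters="abcdefghijklmnopqrstuvwxyz"):
    """Cut-off phrases to type into Google Suggest, one per starting letter."""
    return [f"{brand} {ch}" for ch in letters]


if __name__ == "__main__":
    print(keyword_gaps(["Acme widgets", "acme reviews", "Acme  Reviews"], ["acme widgets"]))
    print(suggest_seeds("acme", letters="abc"))
```

Here `keyword_gaps` returns `['acme reviews']`, which is exactly the kind of brand term the client example below turned up.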

An example: for a recent client of mine, I did extensive keyword research based on the keywords it was receiving traffic on, but I also typed its brand term and some other terms into Google Suggest to see what completions were suggested. One of the suggested phrases was [brand] + “reviews”. When I looked, it turned out this company was not ranking for reviews of its own product at all and was receiving no traffic on that term.

All this client needs to do now is put up a “Reviews” page that lists, reproduces, or links to the most favorable reviews of its product; since it owns the brand and already has thousands of inbound links containing the brand name, it will very likely rank #1 for this term almost immediately. As a result it can probably receive about 500 unique visitors a month, and it will gain more control over the online conversation about its products, all from identifying a brand term for which it is *not* receiving traffic.

Links: Who is *Not* Linking to Your Competitors?

Michael Martinez has a *great* recent post called “Keeping Up With The Cloneses” on why spending a lot of time hunting for link targets by examining competitors’ link profiles is not that useful. His key insight:

“If your competitor has obtained links from 100,000 Websites you should be focusing on the 20,000,000 domains that haven’t yet linked to him.”

Competitor link analysis is, of course, useful for learning what types of sites and linking strategies competitors have used, as a source of ideas; but as far as the specific sources of the links go, he’s absolutely right: it’s usually neither efficient nor desirable to obtain links from the same sources.
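In code terms, Martinez’s point is to stop mining the competitor’s list and start from the much larger set of sites that *aren’t* on it. The Python sketch below assumes you already have three lists of domains, however you gathered them (a prospect list, the competitor’s linking domains, and your own linking domains); the domain names, site-type labels, and function names are hypothetical. The second function covers the legitimate use of competitor analysis mentioned above: summarizing the *types* of sites that link to them.

```python
from collections import Counter


def link_prospects(candidate_domains, competitor_linkers, your_linkers):
    """Domains that link to neither the competitor nor you, sorted alphabetically."""
    already_linking = set(competitor_linkers) | set(your_linkers)
    return sorted(set(candidate_domains) - already_linking)


def site_type_mix(competitor_linkers, site_type):
    """Count the competitor's linking domains by type; unlabeled ones count as 'unknown'."""
    return Counter(site_type.get(domain, "unknown") for domain in competitor_linkers)


if __name__ == "__main__":
    prospects = link_prospects(
        ["blog.example", "news.example", "forum.example", "shop.example"],
        competitor_linkers=["news.example"],
        your_linkers=["shop.example"],
    )
    print(prospects)  # ['blog.example', 'forum.example']
    print(site_type_mix(["news.example", "dir.example"], {"news.example": "news site"}))
```

The type summary gives you ideas for *kinds* of sites to approach; the prospect list keeps you pointed at the domains nobody in your niche has tapped yet.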

Don Rumsfeld Called This Concept “Known Unknowns”

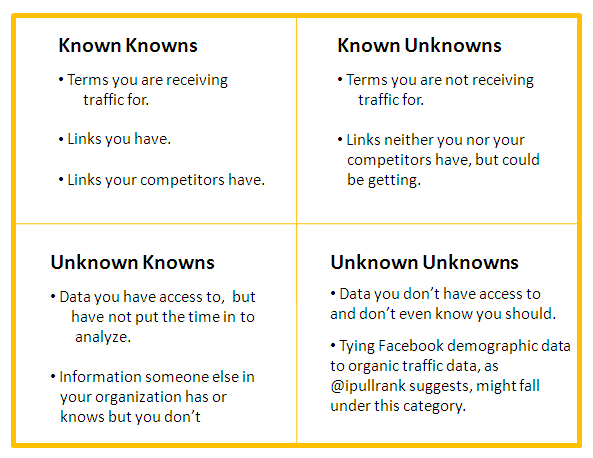

During the Iraq war, Donald Rumsfeld famously divided knowledge into known knowns, known unknowns, and unknown unknowns, which fill three of the four quadrants of a simple grid. The two examples we’ve given are “Known Unknowns”, i.e. things that you don’t know, but you *know* you don’t know. He didn’t mention the fourth quadrant, “Unknown Knowns”, which are the things you know but didn’t realize you knew, but his succinct summary is a pretty good one and well worth the 35 seconds it takes to watch:

Donald Rumsfeld on Unknown Unknowns

Figure 1 shows my interpretation of these four quadrants and some examples that apply in online marketing.

Unknown Unknowns

There is a very exciting new data collection and analysis technique, conceived and evangelized by Michael King (also known as @ipullrank), that there’s a good chance you’re not even aware of, and that I have placed in the “Unknown Unknowns” quadrant of Figure 1.

Michael’s technique enables you to build data-driven personas by intersecting Facebook demographic data on your visitors with their keyword searches. It requires a good reason for users to log in to your website with their Facebook account (say, for a survey or a game) and some JavaScript snippets in the right places, but it is not that difficult: he lays out the entire process and even provides the code for it.

As for the value this can bring you, let’s assume you sell [widgets]. It could be that a huge percentage of your business is “people over 50 who type the search phrase [widget gift]”, but you don’t even know which questions to ask in order to discover this. Michael’s data-driven persona-building technique, combining Facebook and organic search data, holds great promise for filling in the “Unknown Unknowns” quadrant with segments like this.
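Once the two datasets exist, finding a segment like that is essentially a join followed by a count. The Python sketch below is *not* Michael’s code: capturing the data (the Facebook login and the keyword tracking) is the part his process covers, and here both datasets are simply assumed to be exported already as plain Python structures keyed by a hypothetical `user_id`. The age bands are arbitrary choices for illustration.

```python
from collections import Counter


def age_band(age):
    """Bucket an age into a coarse band; the cut-offs are arbitrary."""
    if age >= 50:
        return "50+"
    if age >= 30:
        return "30-49"
    return "under 30"


def persona_segments(demographics, searches):
    """Count (age band, keyword) segments for users present in both datasets.

    demographics: {user_id: age}, e.g. captured when a visitor logs in with Facebook
    searches:     [(user_id, keyword)], e.g. the organic keyword a visit arrived on
    """
    segments = Counter()
    for user_id, keyword in searches:
        age = demographics.get(user_id)
        if age is None:  # visitor never logged in, so there is nothing to join on
            continue
        segments[(age_band(age), keyword)] += 1
    return segments


if __name__ == "__main__":
    demographics = {1: 55, 2: 62, 3: 24}
    searches = [(1, "widget gift"), (2, "widget gift"), (3, "cheap widgets"), (4, "widget gift")]
    for (band, keyword), count in persona_segments(demographics, searches).most_common():
        print(f"{band:9} {keyword:15} {count}")
```

Sorting the segments by size is what surfaces the “people over 50 who type [widget gift]” group without anyone having to guess at it first.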

This Approach Makes Personas *Actually Doable*

The thing I really like about this technique is that, unlike the flimsy version of marketing personas that has been all the rage over the last decade, it has the potential to bring all of marketing (not just online marketing) back to the database-marketing-driven days of the 1970s, when datasets and systems like PIMS and VALS were developed and tracked, at great expense, to describe segments of the American consumer market.

Personas are a great concept, but without a systematic approach they have lately felt to me like the made-up ravings of marketers who are honestly trying to do a good job but can’t be sure, despite their well-intended brainstorming and research, whether they are missing an important segment. Mike King’s (@ipullrank) concept brings data into the center of this process, in a relatively inexpensive and systematic way.

I saw a recent talk by him on this, and, unlike so many boring talks about online marketing, it absolutely *blew my mind*. Unfortunately I can’t find a video online of him presenting this material, but if you get a chance to see him present on this at one of the large search marketing shows, put his talk at the *top of your list*. If you have not seen or heard Michael’s talk on this concept already, you need to *run, not walk*, to the link below and check it out.

Past, Present, and Future of Personas in Search

Conclusion

The two key learnings we can take from these stories are:

1.) Look beyond the data in front of you and try to get at your “Known Unknowns” by using a diversity of tools and techniques to uncover data that isn’t being handed to you on a silver platter, but that you know is out there.

2.) Educate yourself about different disciplines and emerging techniques in online marketing (and beyond), because there may be additional data sources and methods of analysis that you didn’t even know existed that you could be leveraging right now; when you don’t know something, and you don’t even know that you don’t know it, there could be a big missed opportunity waiting for you.

[1] “An Interview with Milton Friedman,” Macroeconomic Dynamics, 5, 2001, 101-131

http://www.stanford.edu/~johntayl/Onlinepaperscombinedbyyear/2001/An_Interview_with_Milton_Friedman.pdf

[2] Various sources on the internet and in books identify the plane as the B-17, the B-24, the B-25, or the B-29, and Wald’s “customer” as either the RAF or the US Army Air Forces. Since Wald’s paper was published in 1943, I think we can rule out the B-29. Notably, early versions of the B-17 were said to have been poorly armored, so my thinking is that the data he analyzed came either from the RAF’s early B-17s or perhaps from the US Army Air Forces’ B-17s deployed in England in mid-1942.

[3] Wikipedia notes that the Germans, who actually *had* the holes in the downed planes to examine, concluded that when attacking from behind with 20mm cannons, a fighter had to fire on average 1,000 rounds, at an average hit rate of two percent, to score the 20 hits needed to bring down a B-17. Later they discovered that attacking from directly in front of the bomber (presumably there hadn’t been much data on this) required only 4-5 hits. If you look at page 88 of Wald’s paper (page 97 in the PDF), he calls out a 20-mm cannon shell strike on the engine as having the highest probability of bringing down the bomber, with the #2 scenario being a 20-mm cannon shell strike on the cockpit. One wonders whether the “hypothetical” data Wald refers to in the paper was perhaps actual data!